Do LLMs or MT Engines Perform Translation Better?


Welocalize Reveals Findings on LLMs vs. MT Engines for Translation Quality

Generative AI and Large Language Models (LLMs) are expected to disrupt content services industries and professions in several ways, including putting the ability to generate multilingual content into the hands of content authors, streamlining workflows, and performing translations.

Welocalize, a pioneer of tech enablement within language services, is monitoring these forces. Language services companies have used AI to translate content for decades, and understanding how LLMs compare to that technology will help inform how the multilingual services landscape will evolve.

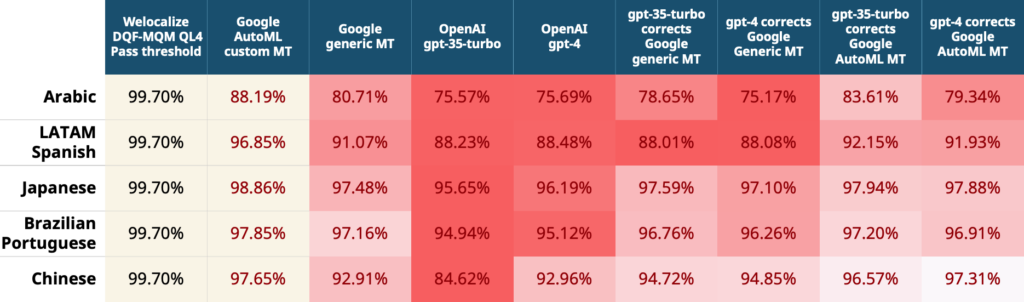

In a recent study, we compared the performance of eight LLM and machine translation (MT) workflow variants, including a commercial MT system trained by Welocalize and currently in production. We analyzed the quality of translations of customer support content from English into five target languages, including Arabic, Chinese, Japanese, and Spanish.

According to our findings, the custom NMT (neural machine translation) models outperformed all other variants, including both the ‘pure LLM’ outputs and the hybrid workflows combining NMT and LLMs. However, both the LLM-augmented workflows and the ‘pure LLM’ prompts came very close to meeting a high industry-standard quality threshold, sometimes missing it by only a few tenths of a percentage point.

“It is particularly impressive that more challenging target languages like Arabic, Chinese, and Japanese saw promising results,” comments Elaine O’Curran, Senior AI Program Manager at Welocalize.

Although LLMs like GPT-4 may not yet match the raw translation performance of highly trained NMT engines, they come impressively close to achieving similar results.

As LLMs are fine-tuned and work their way into the corporate IT stack, their ability to deliver the desired translation results with lighter prompting and minimal task-specific training will make them a compelling alternative.

“It is easy to imagine a future where LLMs outperform NMT, especially for specific applications, content types, or use cases. We will continue to compare and analyze their performance in the coming months,” adds O’Curran. “It will also be interesting to see the performance of customized LLMs. Similar to MTs, the idea is to fine-tune the model for a specific context, domain, task, or customer requirement to enhance their ability to provide more accurate translations for different use cases.”

Select results from Welocalize’s evaluation of translations by trained MT, generic MT, and LLMs. The darker the red in the cell, the further the translation was from the quality pass threshold. Source word count: 5,000.

Table Notes

Google AutoML = Custom MT

Google Translate = Generic MT

Generic MT engines such as Google Translate, Microsoft Translator, and Amazon Translate are not trained on data for a particular domain or topic. Custom MT engines are trained on data from specific domains or customers.

DQF-MQM = Dynamic Quality Framework – Multidimensional Quality Metrics

Quality Level 4 = High quality (human translation quality)

The pass threshold is based on the quality level.

Each error is assigned a value based on the error severity, and the quality score is calculated based on the number and severity of errors found in the translation.
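The notes above describe the scoring only in outline. The sketch below shows one common way an MQM-style score can be computed from error counts and severities. The severity weights (minor = 1, major = 5, critical = 10), the per-100-words normalization, the 99.0 pass threshold, and the sample errors are illustrative assumptions, not the values used in Welocalize's evaluation.

```python
from dataclasses import dataclass

# Assumed MQM-style severity weights; illustrative, not Welocalize's actual values.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 10}


@dataclass
class TranslationError:
    category: str  # e.g. "accuracy", "terminology", "fluency"
    severity: str  # one of the keys in SEVERITY_WEIGHTS


def quality_score(errors: list[TranslationError], word_count: int) -> float:
    """Score out of 100: subtract weighted penalty points per 100 source words."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(SEVERITY_WEIGHTS[e.severity] for e in errors)
    return max(0.0, 100.0 - penalty * 100.0 / word_count)


def gap_to_threshold(score: float, threshold: float) -> float:
    """How far a score falls short of the pass threshold (0.0 if it passes)."""
    return max(0.0, threshold - score)


# Hypothetical sample: 5,000 source words, three minor errors and one major error.
PASS_THRESHOLD = 99.0  # hypothetical threshold for illustration only
errors = [TranslationError("fluency", "minor")] * 3 + [TranslationError("terminology", "major")]
score = quality_score(errors, word_count=5000)
print(round(score, 2), score >= PASS_THRESHOLD, gap_to_threshold(score, PASS_THRESHOLD))
# prints: 99.84 True 0.0
```

Under these assumed weights, eight penalty points across 5,000 words cost only 0.16 points, which shows how a handful of errors can decide whether output lands just above or just below a threshold. The `gap_to_threshold` value roughly corresponds to what the red shading in the results table represents: the larger the gap, the further the output was from passing.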

Customizing for Greater Accuracy

Customizing LLMs involves training the model on domain-specific or task-specific data to improve its performance in a particular area. Here’s how and why fine-tuning can help LLMs deliver more accurate translations:

- Domain-specific knowledge: By training LLMs with data from a specific domain or industry, such as legal, medical, or technical content, the model becomes more familiar with the vocabulary, terminology, and context related to that domain. This enables the LLM to generate more accurate translations within that specific area of expertise.

- Task-specific training: LLMs can be fine-tuned with task-specific data to improve their performance for particular translation tasks. For example, if the goal is to enhance translations of customer support content, the LLM can be trained on a dataset of similar customer support conversations. This allows the model to learn specific patterns, sentence structures, and phrases commonly used in such conversations, leading to more accurate translations in that specific context.

- Adaptation to company-specific data: Training LLMs with company-specific data, such as previously translated content or in-house glossaries, helps the model align with the organization’s preferred terminology, style, and tone. This customization helps ensure that the LLM generates translations consistent with the company’s brand voice and requirements.

- Improved context awareness: LLMs have a strong ability to understand and generate text based on context. Fine-tuning allows the model to become more context-aware in specific translation scenarios. For instance, fine-tuning can be performed to enhance the LLM’s understanding of idiomatic expressions, cultural nuances, or specific regional variations, resulting in more accurate and culturally appropriate translations.

- Reduced bias: Fine-tuning LLMs provides an opportunity to address biases that may exist in the model’s original training data. By carefully curating and balancing the fine-tuning data, these biases can be mitigated, leading to fairer, less biased translations.
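To make the company-specific data and curation points more concrete, here is a minimal sketch of preparing fine-tuning examples from a translation memory and a glossary. It assumes the chat-style JSONL layout (one `{"messages": [...]}` object per line) accepted by some hosted fine-tuning services, such as OpenAI's. The `TMPair` class, the helper functions, the prompt wording, and the sample data are all hypothetical, and the substring-based term matching is a deliberate simplification.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class TMPair:
    source: str  # English source segment
    target: str  # approved human translation from translation memory


def relevant_terms(source: str, glossary: dict[str, str]) -> dict[str, str]:
    """Glossary entries whose source term appears in the segment (simple substring match)."""
    lowered = source.lower()
    return {src: tgt for src, tgt in glossary.items() if src.lower() in lowered}


def to_record(pair: TMPair, glossary: dict[str, str], target_lang: str) -> Optional[dict]:
    terms = relevant_terms(pair.source, glossary)
    # Curation: skip pairs whose target ignores an approved glossary term,
    # so the model is not trained on inconsistent terminology.
    if any(tgt not in pair.target for tgt in terms.values()):
        return None
    system = f"Translate customer support content from English into {target_lang}."
    if terms:
        system += "\nUse these approved terms:\n" + "\n".join(
            f"{src} -> {tgt}" for src, tgt in sorted(terms.items())
        )
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": pair.source},
            {"role": "assistant", "content": pair.target},
        ]
    }


def build_jsonl(pairs: list[TMPair], glossary: dict[str, str], target_lang: str) -> str:
    """One JSON object per line, keeping non-ASCII characters readable."""
    records = (to_record(p, glossary, target_lang) for p in pairs)
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records if r is not None)


# Hypothetical company glossary and translation memory.
glossary = {"account": "cuenta", "password": "contraseña"}
pairs = [
    TMPair("Reset your password.", "Restablezca su contraseña."),
    TMPair("Open your account settings.", "Abra la configuración de su perfil."),
]
out = build_jsonl(pairs, glossary, "Spanish")
print(out)
```

In this example the second pair is dropped because its translation does not use the approved term "cuenta". Whether to drop, correct, or keep such segments is a curation decision, and a production pipeline would need tokenization and morphology-aware term matching rather than plain substring checks.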

Moving Multilingual Content Generation Upstream

The integration of LLMs into content tools and workflows could reconfigure the translation industry. Companies will be able to produce content simultaneously in multiple languages, streamlining their processes and increasing efficiency.

LLMs represent a force of potential disruption within the translation industry. As they continue to evolve and become more accurate, they will drive greater automation and push translation and localization upstream in the content supply chain.

At Welocalize, we’re driving the future of AI in global content. Are you ready to leverage the power of LLMs and MT engines? Connect with us to streamline your localization and translation processes and effectively reach your global audiences. Discover, innovate, and translate more with Welocalize.